An interview with Dr. Christopher Zugates, Application Engineer at arivis AG, discussing a revolutionary way of analyzing complex 3D images… through virtual reality!

What are the main challenges that scientists face when it comes to analyzing 3D microscopic images?

One of the biggest challenges in 3D microscopy is the size and complexity of the images that we are now able to capture. Advancements in technology mean that not only are we capturing more information about a sample, we are also able to carry out more analysis using that information.

The first challenge with such advanced microscopes is working out how to examine a very complex image in the first place! When you have massive datasets, massive volumes or 3D movements, how should we view these data, and how can we explore them?

Researchers are now having to decide how to best analyze and present data on complex 3D images, and share this with the scientific community.

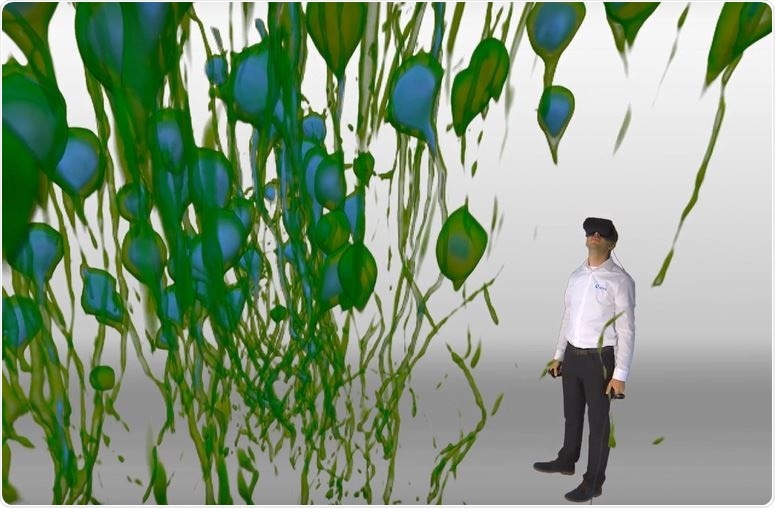

Image Courtesy: Dr. Christopher Zugates, arivis AG

What is InViewR?

InViewR is an application that enables researchers to put on a commercial virtual reality headset and be immersed in 3D microscopic images. Instead of seeing a 3D image on a 2D computer screen, data are presented to you as objects in your vicinity that you can reach out and touch. This enables you to manipulate the data in ways that you wouldn’t have been able to do before.

Speaking from my own research experience, I was able to see an object in a faraway corner of the image and reach out and touch it right away. There was almost no feeling around; my brain already knew how far to move my hand. I could move the structures around, rotate them, and observe them from all different angles. I could even put something behind me and reach back, without looking, grab it and pull it in front of me again!

With a flat-screen, you are losing touch with reality. You started off with real, three-dimensional materials and carried out, for example, immunohistochemistry, then did the imaging and suddenly, it looks nothing like the raw materials. In the VR headset, the material becomes real again. I think this is an advantage of InViewR and VR-based rendering of raw 3D data in general.

In the VR headset, you can interact with the material again. You can turn it, go inside of it, do cross-sections with your hands and most importantly, carry out highly accurate measurements in an efficient and comfortable way.

This is particularly useful for neuron tracing. The tracing tool is mapped to your hand, so when you reach out and touch a neuron, the tool recognizes this and measures the distance and diameter as you move down the axon.

It enables you to cooperate with an algorithm to find the center point of an axon and trace it. It’s a world away from observing images on a flat screen, and much more like working naturally with your hands as you do in the lab.

How can VR transform the way that researchers analyze and present data?

There are definitely efficiencies to be gained by doing things in virtual reality. Manipulating and analyzing images on a computer screen is doable, but it would be far more efficient to just reach out and touch the image, draw an object, or measure a certain distance between objects.

Another place where virtual reality will be important is sharing the significance of your work. As scientists, we typically present our data to each other using PowerPoint presentations, which is a 2D format. We are always trying to explain what we saw through words or 2D images. What we at arivis have noticed over the years is that VR is a superb way to tell the story. Scientists are able to share their work by putting a colleague into their dataset and pin-pointing the areas of significance.

This could also be useful for teaching labs. When I started out in graduate school, I was training as a neurobiologist. A lot of my early training involved reading papers that other people had written, and this learning process carries on for a lifetime. There is a huge gap between reading these papers which display 2D images and actually having the material in your hand. VR could be a bridge for education, where you can show people the structures of the tissues and the organs outside of the laboratory.

One of the biggest advantages for scientists will be analyzing images where there is a lot of intermingling of structures. For example, you might want to observe a neuron that goes from one plane to another and crisscrosses with lots of other neurons in its path. We want to observe each individual neuron, as there's something special about each one of these structures, and we want to understand their properties individually. In order to do this, we need to untangle the neurons and pull them apart from each other.

Unless an algorithm is smart enough to be looking at the structural flow and continuity over long distances, it wouldn't be able to separate neurons that are very close together. Using VR, you can see the texture of the neuron and your brain can distinguish one structure from another, even when the neurons are touching and crossing over. This is immensely powerful for data analysis and allows you to pick away at a vast amount of information.

It’s the same with living cells. We worked with a customer who labels vesicles that move rapidly around a cell. Using the VR headset, I can sit inside one of the cells and see the vesicles moving around. It’s like my brain is filling in the gaps; I can easily see that this vesicle jumped from here to there, to there, and I can trace it.

I can put my finger on it and track its trajectory around the cell. I see vesicles come into my peripheral vision, cross my visual field and move on to the other side. This is easy for you to do if you can see it, but can be relatively difficult to solve on a computer.

Why did you feel it was important to develop technology that allows scientists to “walk around” their data?

Four years ago, we were exploring the use of virtual reality in research for fun! We brought our first VR system to the Society for Neuroscience annual conference and hundreds of people came to put the headsets on, all of them flying around inside the images.

After the show, we started to look at how we could convert the VR experience from something fun, into something scientifically useful. We looked at every aspect of the problem, from storytelling to sharing data, and even collaborative VR where two people can be in there at the same time.

We then thought, if it’s easy to just reach out and touch an object, maybe we should add painting or sculpting options to help researchers with segmentation issues. Lots of researchers had told us that they wanted to be able to mark dendritic spines on a neuron easily. We realized that if we made the workflow very easy, where you just click a button and you're instantly marking the object, then it could be a very efficient way of doing this common task.

We also made easy ways to measure the 3D distance between points and to do freehand 3D sculpting on images. Now we are combining these tools with machine intelligence to make the results more and more accurate. That’s why this year, we released our new smart neuron tracing tool.

Along with this, we took lots of different tools and tricks and started to package them into workflows. We’re now starting to see people connect with VR as a tool for analysis instead of just a fun thing to do.

Which fields of research has InViewR been designed for?

At first, we didn’t think about which fields we were trying to address; we just wanted to make 3D data accessible and easy to analyze. We later started developing workflows for neuroscientists, because scientists in this field really push the limits on the size and information content stored within an image.

Imaging complex neuronal tissue requires high spatial and temporal resolution, which is why these images are very amenable to VR analysis. You can see individual structures in VR that would otherwise appear as an indiscernible tangle of neurons on a 2D screen.

With a very dense image, you might need to turn the image in just the right way to see something in a cross-section, and on a screen with a mouse, this can be difficult and frustrating. Instead of clicking and trying to move a cutting plane with a mouse and keyboard, in the VR environment you just need to move your head naturally! You can, of course, rotate the dataset, but you can also make adjustments with your head to get the perfect view.

What are the main differences between VR and visualizing results on a 2D computer screen?

If you think about your physical environment, say you're in your house or your office, there's a certain way that you have the room physically organized. You have this here and this there, and this there. Somehow, it's very easy for your brain to remember where your tools and materials are.

This means you can organize and work in the space in a really efficient way. This is comparable to the VR environment. You've got this object in front of you that you can touch and efficiently work with. I can go back to this place. I can go over to this place. I can draw a line from A to B to C to D very, very easily, because my brain perceives it to be a real object.

How does the technology help scientists to correct segmentation issues?

Everybody wants to take measurements from their images. Doing it on a two-dimensional screen where you are drawing plane by plane can be very, very accurate but can also be time-consuming and laborious. In the VR environment, much more information about where there are segmentation errors is conveyed to the user at once, and they can efficiently move to these and correct them.

You’re hunched over a computer screen for hours at a time, trying to manipulate the image. With virtual reality, you’re standing straight up or sitting, and extending your hands in the way that you use them naturally. It cuts out the need for keyboards and mice, making the task less damaging to the joints and musculature. If you work fast, then it can even be a good workout.

Tell us more about the use of the technology. Does it work with any 3D imaging system?

Yes! We take images from any 3D, or 3D-over-time, modality and try to make importing them as easy as we possibly can for our users. If you look inside our software, there's a long list of different file types that we support.

In most cases, it’s simply a drag and drop exercise. You just drag the file onto the desktop, the software imports it into the VR headset and you’re ready to go.

Image Credit: arivis AG

What role will virtual reality play in the future?

I think we will see scientists using virtual reality more and more. I also think that we will start to move into augmented reality, or AR, as the technology evolves. Working in the virtual environment will catch fire as headsets become more comfortable and more accessible.

The way that we use our hands in the VR environment is likely to get better too. At the moment, we are using controllers with some buttons, but there are lots of promising technologies that will enable people to use their hands. These incorporate not just vibrational feedback, but also pressure feedback and thermal feedback. All these other dimensions can be added, to the point where the data may feel like a real object.

I don't know if we would be permanently working in the VR world. We developed our VR tool in a way that the tasks that you do in there are very specialized. We don't expect you to put the headset on and sit in there all day and do all of your work. Right now, we are using VR to solve special problems.

There are still lots of tasks that you will want to do in a traditional way on the computer. One of the special things about our VR application is that it fits with all of our other applications. We have an application that's very feature-rich, a traditional image visualization and analysis package that's running on your laptop or your desktop, then we have some server solutions as well.

When you want to do big, batch analysis, with lots of imaging jobs, you can do it in that environment as well. But all of these tools are connected. Anything you do in the VR environment can be seen on a computer, and vice versa.

Where can readers find more information?

About Dr. Christopher Zugates

Dr. Christopher Zugates has been working at the confluence of Science, Teamwork, and Multi-Dimensional Imaging for the last two decades. As an arivis AG Application Engineer, he helps the world’s most talented scientists leverage new scalable Imaging Science tools
