In a recent study published in the journal Scientific Reports, researchers in the United States and the Netherlands used a chronically implanted brain-computer interface (BCI) to synthesize intelligible words from brain activity in a patient with amyotrophic lateral sclerosis (ALS). Human listeners correctly recognized 80% of the synthesized words, demonstrating the feasibility of BCI-based speech synthesis for a person with ALS.

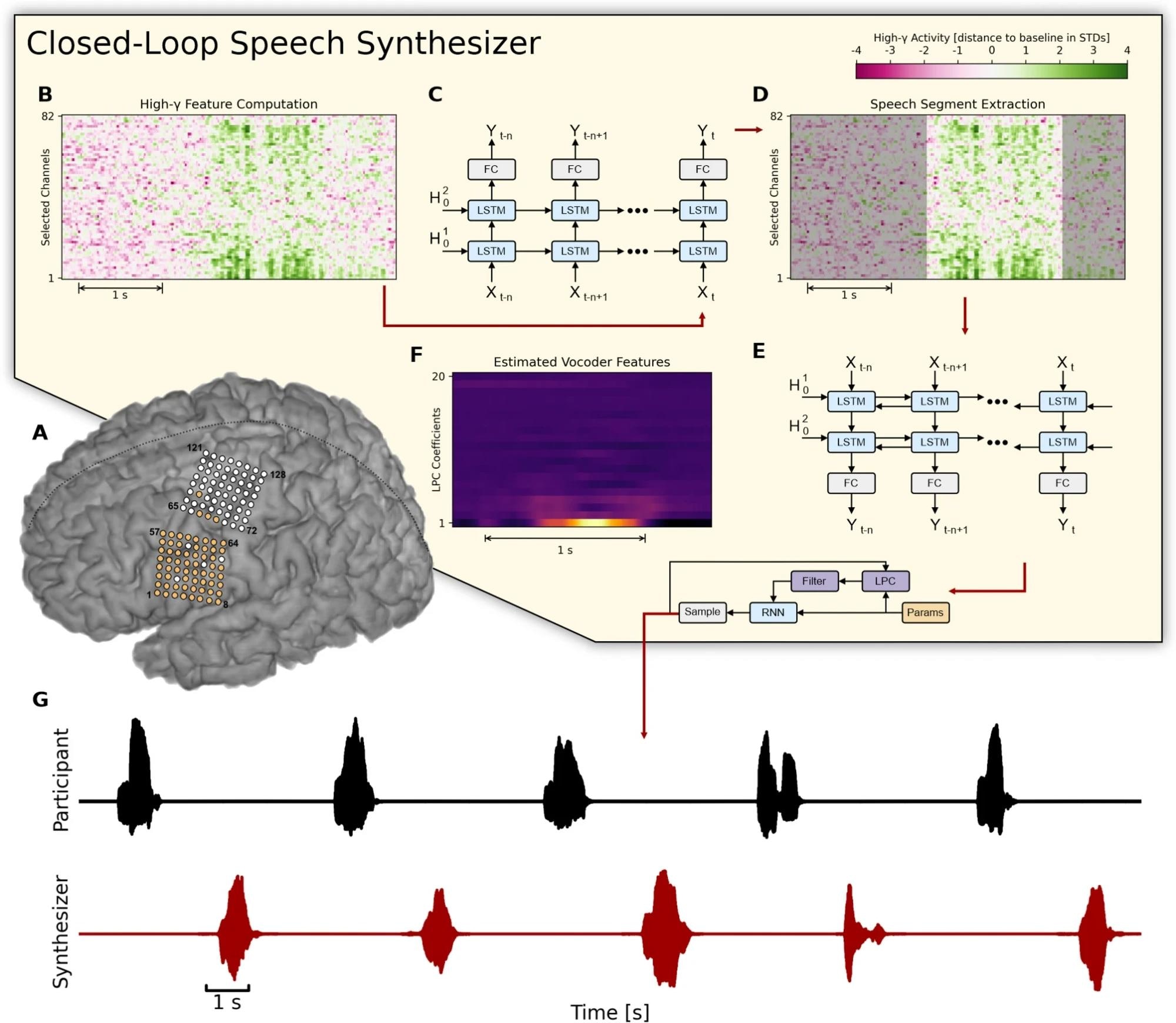

Overview of the closed-loop speech synthesizer. (A) Neural activity is acquired from a subset of 64 electrodes (highlighted in orange) from two 8 × 8 ECoG electrode arrays covering sensorimotor areas for face and tongue, and for upper limb regions. (B) The closed-loop speech synthesizer extracts high-gamma features to reveal speech-related neural correlates of attempted speech production and propagates each frame to a neural voice activity detection (nVAD) model (C) that identifies and extracts speech segments (D). When the participant finishes speaking a word, the nVAD model forwards the high-gamma activity of the whole extracted sequence to a bidirectional decoding model (E) which estimates acoustic features (F) that can be transformed into an acoustic speech signal. (G) The synthesized speech is played back as acoustic feedback.

Study: Online speech synthesis using a chronically implanted brain–computer interface in an individual with ALS
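
Step (B) of the caption, extracting high-gamma features from each electrode frame by frame, can be illustrated with a minimal sketch. It assumes a 1 kHz sampling rate, 50 ms windows advanced in 10 ms steps, and a 70 to 170 Hz high-gamma band; these values and the helper names `band_log_power` and `high_gamma_features` are hypothetical, not the study's published settings. For simplicity, the sketch computes log band power with a plain discrete Fourier transform, a swapped-in technique rather than the filtering a real-time system would likely use.

```python
import cmath
import math


def band_log_power(window, fs, f_lo, f_hi):
    """Log of the summed DFT power in bins whose frequency lies in [f_lo, f_hi]."""
    n = len(window)
    mean = sum(window) / n
    centered = [x - mean for x in window]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered)
            )
            power += abs(coeff) ** 2
    return math.log(power + 1e-12)  # small constant avoids log(0)


def high_gamma_features(channels, fs=1000, win_ms=50, step_ms=10, band=(70.0, 170.0)):
    """Frame-wise high-gamma log power per channel (hypothetical helper).

    Window length, step and band edges are illustrative assumptions.
    channels: list of equal-length sample lists, one per electrode.
    Returns a list of frames; each frame holds one value per channel.
    """
    win = int(fs * win_ms / 1000)
    step = int(fs * step_ms / 1000)
    n_samples = len(channels[0])
    frames = []
    for start in range(0, n_samples - win + 1, step):
        frames.append(
            [band_log_power(ch[start:start + win], fs, *band) for ch in channels]
        )
    return frames


# Synthetic demo: a 100 Hz tone lies inside the assumed band, a 20 Hz tone does not.
fs = 1000
t = [i / fs for i in range(200)]
fast = [math.sin(2 * math.pi * 100 * x) for x in t]
slow = [math.sin(2 * math.pi * 20 * x) for x in t]
frames = high_gamma_features([fast, slow], fs=fs)
print(len(frames), frames[0][0] > frames[0][1])  # prints: 16 True
```

Each output frame is one feature vector per time step, which is the unit the caption describes being passed on to the voice activity detector.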


Background

Neurological disorders such as ALS can impair speech production and cause severe communication difficulties, up to and including locked-in syndrome (LIS). Augmentative and alternative technologies (AAT) offer only limited solutions, which has prompted research into implantable BCIs controlled directly by brain activity. Studies have sought to decode attempted speech from brain activity, and recent work has advanced the reconstruction of both text and acoustic speech. Whereas early studies focused on individuals with intact speech, more recent research has extended to people with motor speech impairments such as ALS. Besides implantable BCIs, non-invasive methods such as electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) have been explored for speech decoding, but these non-invasive approaches are limited in resolution and practicality. These advances promise to improve communication for individuals with speech impairments, but challenges remain in translating the findings into real-world applications. Therefore, in the present study, the researchers demonstrated a self-paced BCI that translated brain activity into audible speech resembling the user's own voice for an individual with ALS participating in a clinical trial.

About the study

The study involved a male ALS patient in his 60s who was enrolled in a clinical trial and implanted with subdural electrodes and a Neuroport pedestal. Neural signals and acoustic speech were recorded with a biopotential signal processor.

Speech was synthesized by decoding electrocorticographic (ECoG) signals recorded during overt speech production from cortical regions associated with articulation and phonation. Because the participant had significant impairments in articulation and phonation, the researchers focused on a closed vocabulary of six keywords that he could still produce individually with high intelligibility. Training data were acquired over six weeks, after which the BCI was deployed in closed-loop sessions for real-time speech synthesis. Delayed auditory feedback was provided to accommodate the ongoing deterioration of the participant's speech caused by ALS.

The pipeline for synthesizing acoustic speech from neural signals used three recurrent neural networks (RNNs): one to identify and buffer speech-related neural activity, one to transform the buffered neural activity into an intermediate acoustic representation, and a vocoder to recover the acoustic waveform. The RNNs were trained to detect neural voice activity and to map high-gamma features onto cepstral and pitch parameters, which were then converted into acoustic speech signals. Statistical analyses, including Pearson correlation coefficients and permutation tests, were used to assess the accuracy and reliability of the synthesized speech.
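
The buffering role of the first network can be sketched in a few lines: frame-wise speech probabilities (here assumed to come from the voice activity detector) are thresholded, consecutive speech frames are accumulated, and a segment is handed on only once speech ends, so a bidirectional decoder can see the whole word. The threshold, the minimum segment length and the name `extract_segments` are hypothetical; the article does not describe the study's actual decision rule.

```python
from typing import Iterable, List, Sequence, Tuple

Frame = Sequence[float]  # one high-gamma feature vector


def extract_segments(
    frames: Iterable[Frame],
    speech_probs: Iterable[float],
    threshold: float = 0.5,  # hypothetical decision threshold
    min_frames: int = 3,  # hypothetical minimum segment length
) -> List[Tuple[int, List[Frame]]]:
    """Group consecutive frames whose speech probability exceeds `threshold`.

    Returns (start_index, buffered_frames) for every run of at least
    `min_frames` frames. A segment is emitted only after its run has ended,
    mimicking a detector that waits for the word to finish.
    """
    segments = []
    buffer: List[Frame] = []
    start = 0
    for i, (frame, p) in enumerate(zip(frames, speech_probs)):
        if p > threshold:
            if not buffer:
                start = i  # a new run of speech frames begins here
            buffer.append(frame)
        else:
            if len(buffer) >= min_frames:
                segments.append((start, buffer))
            buffer = []  # discard short runs and reset after each run
    # flush a run that is still open when the stream ends
    if len(buffer) >= min_frames:
        segments.append((start, buffer))
    return segments


# Toy probabilities (not study data) for eleven frames.
probs = [0.1, 0.9, 0.8, 0.95, 0.2, 0.7, 0.1, 0.6, 0.9, 0.9, 0.9]
frames = [[float(i)] for i in range(len(probs))]
segs = extract_segments(frames, probs)
print([(s, len(b)) for s, b in segs])  # prints: [(1, 3), (7, 4)]
```

The single-frame run at index 5 is dropped as too short, which shows how a minimum length can suppress spurious detections.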

Results and discussion

The speech-synthesis BCI effectively recreated the participant's speech during online decoding sessions. The synthesized speech aligned well in timing with the participant's natural speech and preserved important speech features, including phoneme- and formant-specific information (correlation score 0.67). In three sessions conducted about five and a half months after training, the system continued to perform consistently.
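
The correlation score and the permutation tests mentioned earlier can be illustrated with a small sketch: Pearson's r between a synthesized and a reference feature trajectory, plus a permutation test that compares the observed r with a null distribution. The shuffling scheme (permuting the frames of one trajectory), the number of permutations and the function names are assumptions; the article does not say how the study built its null distribution, and the inputs below are toy signals, not study data.

```python
import math
import random
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def permutation_p_value(
    x: Sequence[float], y: Sequence[float], n_perm: int = 1000, seed: int = 0
) -> float:
    """One-sided p-value: how often a shuffled y correlates at least as well with x.

    Frame shuffling is an assumed null model, chosen here for simplicity.
    """
    rng = random.Random(seed)
    observed = pearson_r(x, y)
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if pearson_r(x, shuffled) >= observed:
            hits += 1
    # add-one correction keeps the estimate away from exactly zero
    return (hits + 1) / (n_perm + 1)


# Toy trajectories: a smooth "reference" and a noisy "synthesized" copy.
reference = [math.sin(i / 3) for i in range(60)]
synthesized = [v + 0.3 * math.cos(i) for i, v in enumerate(reference)]
r = pearson_r(reference, synthesized)
p = permutation_p_value(reference, synthesized)
print(f"r = {r:.2f}, p = {p:.4f}")
```

Shuffling frames destroys temporal alignment while keeping the value distribution, so a small p indicates that the match is unlikely to arise from unaligned features with the same statistics.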

In listening tests with 21 native English speakers, synthesized words were correctly identified with 80% accuracy, with errors arising mainly from occasional confusion between similar words such as "Back" and "Left." Individual listener accuracy ranged from 75% to 84%. Although listeners sometimes struggled to discriminate similar words, the synthesized words, drawn from a vocabulary designed for intuitive command and control, showed promising intelligibility. By comparison, listeners recognized samples of the participant's natural speech with 99.8% accuracy.
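
The accuracy figures above are simple proportions: overall accuracy pools every listener's responses, per-listener accuracy is computed separately for each listener, and a confusion count shows which words are mistaken for which. The sketch uses made-up responses from two hypothetical listeners; only the words "Back" and "Left" come from the article, the other words are placeholders, and the data layout and the name `summarize` are illustrative.

```python
from collections import Counter
from typing import Dict, List, Tuple

Trial = Tuple[str, str]  # (word that was synthesized, word the listener chose)


def summarize(responses: Dict[str, List[Trial]]):
    """Return overall accuracy, per-listener accuracy and a confusion counter."""
    per_listener = {}
    confusions = Counter()
    correct_total = trials_total = 0
    for listener, trials in responses.items():
        correct = sum(target == answer for target, answer in trials)
        per_listener[listener] = correct / len(trials)
        correct_total += correct
        trials_total += len(trials)
        # count only the mistakes, keyed by (target, answer)
        confusions.update((t, a) for t, a in trials if t != a)
    return correct_total / trials_total, per_listener, confusions


# Hypothetical responses, not study data.
toy = {
    "listener_A": [("Back", "Back"), ("Left", "Back"), ("Up", "Up"), ("Down", "Down")],
    "listener_B": [("Back", "Left"), ("Left", "Left"), ("Up", "Up"), ("Down", "Down")],
}
overall, per_listener, confusions = summarize(toy)
print(overall)  # prints: 0.75
```

Pooling trials before dividing weights every response equally, which matches per-listener averaging only when all listeners rate the same number of samples.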

Further analysis identified the brain areas that mattered most for detecting speech segments. A wide network of electrodes over motor, premotor, and somatosensory cortices played a significant role, whereas the dorsal laryngeal area, the region linked to voice activity, had only a mild impact on speech detection. The study showed that neural activity during speech planning and phonological processing was crucial for predicting speech onset. Interestingly, relevance scores declined after -200 ms, that is, during the final 200 ms before predicted speech onset, possibly because voice activity information was already stored in the model's memory by that point, reducing the influence of further changes in neural activity. Overall, the analysis shed light on the spatiotemporal dynamics underlying the model's speech-identification process.

Conclusion

In conclusion, the present study highlights the potential of chronically implanted BCI technology to provide a means of communication for individuals with ALS and similar conditions as their speech deteriorates. The model's stable performance over months supports the use of ECoG as a foundation for speech BCIs. The study offers hope for improved quality of life and autonomy for people living with conditions such as ALS.