In this interview, Dr. Andrew Webb from the Walter and Eliza Hall Institute (WEHI) describes how his laboratory is using mass spectrometry-based techniques to understand the role of post-translational modifications in human disease.

What areas do you focus on in your laboratory at the Walter and Eliza Hall Institute?

We are one of the leading medical research institutes in Australia. I run the proteome platform technology laboratory. As an institute, most of our research focuses on cancer, immune disorders, and infectious disease. More recently, however, our research focus has grown to include healthy development and aging.

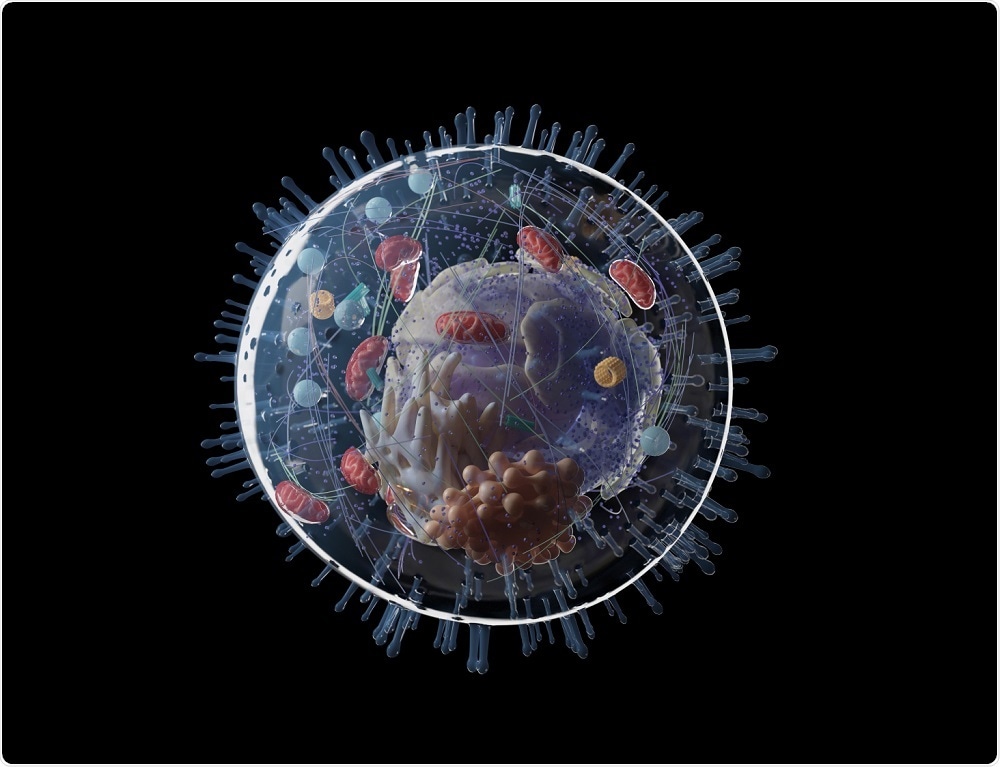

Image Credit: SciePro / Shutterstock.com

Image Credit: SciePro / Shutterstock.com

The institute has 93 laboratories with more than 1,000 staff, and covers a wide spectrum of diseases. With so many researchers, we need reasonably high throughput, so it was important to think about our infrastructure and how we could maximize our outputs.

One of the things that has emerged over time in my laboratory is the idea that we need to be detecting proteoforms: the forms of proteins that exist once you take into account post-translational modifications (PTMs) and other post-translational effects.

We are heavily invested in understanding the physiological roles of proteoforms and developing techniques to better detect them. At the moment, we are using mass spectrometers, but we know that we're only seeing the tip of the iceberg. Whilst these instruments are incredibly sensitive, dynamic range is the main limiting factor: the range of protein abundances within cells, and within many of the biofluids that we analyze, is much larger than what we can detect.

Why is it important to understand the effects of post-translational modifications (PTMs)?

Most medical research labs use bottom-up proteomics, generally in a gene-centric fashion (protein-based quantitation). In our lab, because we work with many cell signaling groups, a lot of the proteins we study are post-translationally modified, and it is important for us to monitor how these PTMs change under different conditions. We generally think of PTMs in the context of the whole protein, which is embodied in the idea of ‘proteoforms’: the molecule we are really interested in is the functional version of the chemically modified gene product.

Whilst we can detect around 11,000 to 13,000 gene products expressed in any cell at a given point in time, each of these can exist in multiple forms. This variation can arise through RNA editing or coding SNPs, transcriptional regulation, truncation, cleavage by proteases, or chemical modification such as phosphorylation. There are also more than 107 known endogenous post-translational modifications. The complexity of what could exist in these protein samples is astronomical. My estimate is that there are probably in the region of 10 million proteoforms in a given cell at any point in time.
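A quick way to see why the numbers become astronomical is to count the theoretical maximum number of proteoforms for one gene product. The minimal sketch below assumes, purely for illustration, that each modifiable site is independent and can take a fixed number of states; the site counts are hypothetical, and real proteoform populations are constrained by biology and far smaller than this upper bound.

```python
from math import prod

def max_proteoforms(states_per_site):
    """Theoretical upper bound on proteoforms for a single gene product.

    Each entry is the number of states one site can take (e.g. 2 for
    unmodified/phosphorylated). Treating sites as independent is a
    simplifying assumption for illustration only.
    """
    return prod(states_per_site)

# Hypothetical protein: 10 phosphosites (2 states each) plus two lysines that
# can be unmodified, acetylated or ubiquitinated (3 states each).
sites = [2] * 10 + [3] * 2
print(max_proteoforms(sites))  # 9216, i.e. 2**10 * 3**2
```

Each additional two-state site doubles the count, which is why even a modest number of sites per protein can push whole-cell estimates into the millions.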

In my laboratory, our goal is to dig deep and focus on as many of these PTM based proteoforms as possible. If we can make measurements at a very deep level, I believe we can start correlating post-translationally modified proteins with certain phenotypes. This is as close as it gets to understanding why we see differences in phenotypes.

What is the best approach for analyzing proteoforms?

One of the best approaches for looking at these proteoforms and the different forms of proteins that can exist is analyzing intact proteins. This is something that we worked on a few years ago when we developed a method to clean up proteins in a very high-throughput and efficient way.

We use a paramagnetic bead as a nucleation reagent to precipitate our proteoforms. If you're familiar with the resin-based SP3 approach, this is a take on that but with an additional elution step. To do this, we took some knowledge from the protein aggregation field and incorporated formic acid to extract cleaned proteins in a very efficient and unbiased way. When you do this, you end up with a very robust and highly reproducible way of releasing intact proteins for MS analysis.

The MS1 signal from the LC separation provides a measure of all the intact proteins that we can detect in a single shot. On average, in a single shot, we can detect about 2,000 different forms of proteins, revealing an incredible array of gene product heterogeneity.

We have found that, on average, proteins exist in about eight different forms (PTM forms). From this, we know that when using bottom-up proteomics, we are only looking at a top-of-the-iceberg snapshot of the proteins that really exist. We know there is much more functional heterogeneity within protein forms than the field currently appreciates.

If there were ways to dig deeper into this, it would be fantastic! We know that this is just the beginning because, when you analyze intact proteins, the signal intensity is spread across many isotopic peaks and charge states. This means that our dynamic range is limited, and there are hard limits on how deep into the proteome we can dig with this approach.
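As a rough illustration of that signal dilution, the sketch below computes the share of a species' total signal that lands in a single peak when it is split across charge states and isotopic peaks. The even split and the peak counts are simplifying, hypothetical assumptions; real charge-state envelopes and isotope distributions are uneven.

```python
def per_peak_fraction(n_charge_states, n_isotope_peaks):
    """Fraction of a species' total signal landing in one peak.

    Assumes (unrealistically) an even split across charge states and isotopic
    peaks; real envelopes are uneven, so the most intense peak gets somewhat more.
    """
    return 1.0 / (n_charge_states * n_isotope_peaks)

# Hypothetical intact protein: 20 charge states x 15 isotopic peaks
print(f"{per_peak_fraction(20, 15):.4f}")  # 0.0033
# Hypothetical tryptic peptide: 2 charge states x 3 isotopic peaks
print(f"{per_peak_fraction(2, 3):.4f}")  # 0.1667
```

Under these assumed numbers, each intact-protein peak carries about 50 times less of the total signal than a peptide peak, which is one way the limited dynamic range shows up in practice.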

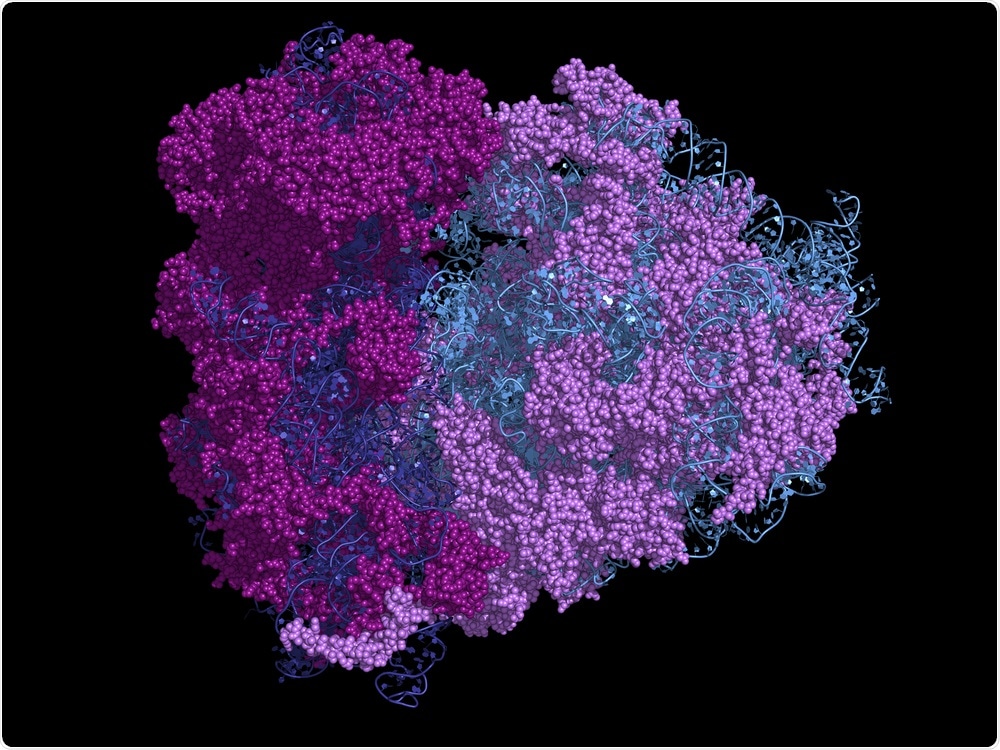

Image Credit: Petarg / Shutterstock.com

Image Credit: Petarg / Shutterstock.com

How can you apply the analysis of proteoforms to the study of human disease states?

In traditional proteomics, when we map peptides back to proteins, we lose a lot of information, because outlier peptides tend to be removed or ignored during analysis. What we try to do now in our research is to retain these peptides, even to the point of performing peptide-level statistics for the quantitative comparisons we're making.
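To make peptide-level statistics concrete, the sketch below computes a Welch's t-statistic for each peptide separately instead of first rolling peptides up into a single protein value. The peptide labels and log2 intensities are placeholders invented for illustration, and a real analysis would normally use a moderated test with multiple-testing correction; this only shows how a modified peptide that behaves differently stays visible.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t-statistic comparing two independent groups of replicates."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

# Hypothetical log2 intensities (3 replicates per condition) for peptides
# from one protein; labels are placeholders, not real sequences.
peptides = {
    "unmodified_peptide_1": ([20.1, 20.3, 20.2], [20.2, 20.1, 20.3]),
    "unmodified_peptide_2": ([18.9, 19.0, 19.1], [19.0, 18.9, 19.1]),
    "phospho_peptide_1": ([16.0, 16.2, 16.1], [18.0, 18.1, 17.9]),
}

for label, (control, treated) in peptides.items():
    print(label, round(welch_t(treated, control), 1))
```

Averaging all three peptides into one protein-level value would shrink the roughly 1.9 log2 change in the modified peptide to well under 1; testing each peptide keeps it visible.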

When we do this, the instrumentation becomes very important. Over the last four or five years, we have also been working on experimental design: getting the controls right for a given sample and running enough replicates to support peptide-level quantification. Another important factor here is to think very carefully about which part of the proteome you want to enrich.

Imagine that any given proteome is like an iceberg: with MS, you can only see the tip. It then becomes very important to think carefully about where your signatures and biological information are likely to lie, i.e. which part of the proteome you can enrich to see the signatures you’re trying to identify. There are numerous ways to delve deeply into specific parts of proteomes, particularly if you have hints of where to look from previous work. One common way to dig into these proteome icebergs is PTM enrichment. This can be done at either the protein or the peptide level, and is often used for phosphorylation and other cell signaling-related modifications.

In which applications do you see proteomics and mass spectrometry having the most potential?

Proteomics is an incredibly powerful and dynamic discovery tool, and its potential is limited only by researchers' creativity. We have experimented a lot with experimental design. There are so many areas in which we can apply these techniques, and for me, one of the most fundamentally exciting is exploring how mass spectrometry can aid medical research. I am personally interested in applying these techniques to drug discovery and to understanding fundamental aspects of biology.

At the moment, we're doing a lot of hydrogen-deuterium exchange, interactomics, global quantitative proteomics and cell surface analysis. More recently, however, we have started looking towards the discovery of clinically-relevant biomarkers.

This more recent interest in clinical biomarkers has been driven by the development of the timsTOF Pro, whose unparalleled long-term signal stability is essential for this work. We have not seen this capability on any other instrument to date: we can potentially run the instrument for six to twelve months, if not longer, with nothing more than a capillary clean. I truly believe that this is one of the most fundamental recent advances in the MS field, and it is going to have a significant impact on the clinical biomarker space.

Are there areas of investigation in proteomics that would benefit from new methodologies?

I think proteomics is too focused on gene-centric quantitation, which assumes that one gene equals one protein in our quantitative analysis. We digest proteins into peptides, identify the spectra, and then work backward from there. What we are really mapping is genes, not proteoforms. The information lost through traditional gene-centric analysis steps matters for so many of the projects that we work on, and we need to be much more proteoform-centric.

In my group, we will continue to work towards peptide-centric approaches, where representative proteoforms can be measured and quantified by their unique modified peptides.

How does instrumentation affect the success of proteoform studies?

The practical implication of this peptide-focused method is that quantification can be quite challenging. The choice of instrument, and the infrastructure that you put around it, is therefore very important. Several years ago, we noticed the Impact II instrument from Bruker. We found it to be incredibly fast and very, very sensitive.

The ion transmission was incredibly efficient, and the signals we saw from this instrument impressed us compared with the other platforms we were using at the time.

The Impact II was also incredibly robust, allowing us to inject plasma for weeks on end, with appropriate sample preparation, without any degradation of the signal. As a result, the Impact II quickly became the go-to instrument in our laboratory for longitudinal studies, and it provided the means for us to begin developing the peptide-centric approach.

Image Credit: Kateryna Kon / Shutterstock.com

Image Credit: Kateryna Kon / Shutterstock.com

Are there limitations to the extremely sensitive instruments you use in your research?

One of the things that surprised us when we started working with the Impact II was that, although we had better quantitation, the increased speed and sensitivity of the instrument didn't translate into more identifications. This initially confounded us.

When comparing our existing MS platform with the Impact II, we would see a lot more features on the Impact II. Even when we ran a shorter gradient, there was a significant increase in measured features. However, this didn't necessarily translate into more identifications. These instruments are so sensitive that we were seeing many more co-eluting precursors.

We realised that the further down you dig, the more features you see, because the number of features increases exponentially at lower abundance levels. The more sensitive your instrument is, the more complexity you see within the sample. I believe this places some fundamental limits on what can be identified in a single analysis of a given sample, due to near-isobaric co-eluting peptides. This limitation is hardware-dependent and occurs whether you operate in data-dependent or data-independent acquisition mode.

On another front, the instrument efficiency of measuring the sample is important too. If you think of samples as a stream of ions coming into the instrument, all of the current analysis modes that exist (including data-dependent (DDA) and data-independent acquisition (DIA) strategies) only use a fraction of the incoming ion beam, and I see this as a significant inefficiency.

In particular, with trapping instruments such as an Orbitrap, a high-intensity nanoflow ion beam may fill the trap with only about a millisecond's worth of ions for a 100 ms scan, leaving most ions flowing to waste. Likewise, on Q-TOFs, every time you perform an isolation event, you are excluding the rest of the ion beam. So, on most traditional MS platforms, most of your time is spent measuring only a very small percentage of your possible ions. Even with data-independent approaches, where the quadrupole passes a somewhat wider slice of the ion beam, that still ends up being a small fraction: up to four or five percent of the ion beam at any one time. That's an enormous amount of potential information that you're throwing away to the vacuum and not using at all.
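The figures quoted in this answer translate directly into the fraction of the ion beam that is used. This back-of-the-envelope sketch simply divides accumulation time by cycle time; the function name is hypothetical, and it ignores transmission losses and other real-instrument effects.

```python
def beam_fraction_used(accumulation_ms, cycle_ms):
    """Fraction of a continuous ion beam that is actually sampled per cycle."""
    return accumulation_ms / cycle_ms

# Figures quoted in the answer above
trap = beam_fraction_used(1, 100)   # ~1 ms of ions trapped per 100 ms scan
dia_window = 0.05                   # upper end quoted for DIA: ~5% of the beam
print(f"trapping: {trap:.0%} used, {1 - trap:.0%} lost")              # trapping: 1% used, 99% lost
print(f"DIA window: {dia_window:.0%} used, {1 - dia_window:.0%} lost")  # DIA window: 5% used, 95% lost
```

By the same arithmetic, an approach that accumulates ions for the whole cycle would have a fraction close to 1.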

Lastly, another limitation of current data-independent acquisition strategies is the relatively slow cycle time needed to scan through the entire mass range. It takes quite a long time for the instrument to acquire the data comprehensively. The cycle time to get back to the same isolation window for the next set of data can be anywhere from two to four seconds, and with most data-independent acquisition strategies it's generally closer to four seconds.

How do you think data acquisition modes can be improved?

With short-gradient nanoflow chromatography, peak widths can fall well within the four-second cycle time mentioned above, so peaks can come and go before the instrument has had a chance to sample them.
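A common rule of thumb in quantitative LC-MS is to aim for several data points across each chromatographic peak. The sketch below estimates how many times a single isolation window revisits a peak at the four-second cycle time quoted above; the peak widths are hypothetical and the function name is illustrative.

```python
def points_per_peak(peak_width_s, cycle_time_s):
    """Approximate number of times one isolation window samples an eluting peak."""
    return peak_width_s / cycle_time_s

# Hypothetical peak widths for short-gradient nanoflow runs, at a 4 s cycle time
for width in (3.0, 6.0, 12.0):
    print(f"{width:.0f} s peak: {points_per_peak(width, 4.0):.2f} points per peak")
```

Under these assumptions, a 3 s peak is sampled less than once on average, which is the "peaks that come and go" problem described above.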

This becomes particularly apparent when you start to think about even shorter gradients to dramatically increase sample throughput. Alternatively, achieving in-depth coverage by fractionating samples and then running a higher number of shorter gradients is something that we're certainly working towards.

A couple of years ago, Joshua Coon argued that now, more than ever, proteomics needs better chromatography. I believe the bigger problem is that we’ve probably reached a state of diminishing returns from new instruments, i.e. better chromatography and better instrument sensitivity mostly mean we’re likely to see more near-isobaric co-eluting peptides, which prevent accurate identifications and precise measurements.

With current instruments, I don't see any way around this. We have looked at different ways of teasing out identifications from chimeric spectra, but as soon as you start co-fragmenting multiple peptides, the spectra become quite challenging to interpret. This is still a problem for all the data-independent acquisition modes as well.

However, I do believe that ion mobility, by adding an orthogonal separation step that increases the effective resolution of the instrument, has very high potential to help us mitigate this and dig deeper into proteomes.

Image Credit: Urfin / Shutterstock.com

Image Credit: Urfin / Shutterstock.com

Why isn’t ion-mobility (IMS) available on all mass spectrometers?

This comes down to the complexity, ion losses and the inherent lower duty-cycle when you put an IMS device on the front of a mass spectrometer.

For me, the TIMS device is an incredible breakthrough technology, and it’s probably the most exciting instrument development that I’ve seen in my career to date. Its compact size lets it sit on the front of an instrument while keeping a footprint that’s still compatible with most laboratories, and its very stable electronics provide an incredibly robust measurement over time. This extra dimension of information also increases the effective resolution, which eases the sample complexity issue I referred to earlier. Lastly, its trapping function brings some pretty impressive advantages too.

Ion losses are going to occur in any IMS device where you're trapping ions and exposing them to a gas flow, but what you get with this new device, the timsTOF Pro, is a tremendous sensitivity gain.

The other significant advantage is that we don't switch off any of the ion beam. With a duty cycle near 100 percent, accumulating all of the incoming ions has enormous potential, because we're sampling as much of the beam as we possibly can.

What are you aiming to achieve by maximizing the number of incoming ions?

Taking advantage of this ability to maximize use of the incoming ion beam, and using this orthogonal separation for deeper interrogation of proteomes, is also being pursued by other groups working on similar approaches. For instance, being able to better match peptides and their mobility-associated fragment ions between individual runs is a significant advantage.

Early on, we looked at the instrument's ability to fragment everything in the incoming ion stream and at what we could match to MS2 in this process. It was computationally challenging, but the real advantage is that by adding many MS2 frames, you accumulate much more signal for your fragment ions. It's a much more sensitive way of extracting MS2 information, and dia-PASEF is now leading this approach. One of the things we were impressed with when we started looking at our data was the fidelity and richness of the MS2 fragmentation. Overall, this gives you an amazing signal-to-noise ratio, because you're summing many MS2 events and keeping only the ions that match your precursor, not overlapping ions.
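Why summing frames helps can be shown with the standard idealized result: if the signal is constant and the noise in each frame is random and independent, summing n frames adds signal linearly but noise only in quadrature, so signal-to-noise grows as the square root of n. The sketch below encodes that textbook assumption; it does not model chemical noise from co-eluting ions, which does not average out this way and is why keeping only precursor-matched ions matters.

```python
from math import sqrt

def summed_snr(single_snr, n_frames):
    """S/N after summing n frames, assuming constant signal and independent random noise.

    Signal adds linearly (n * S) while independent noise adds in quadrature
    (sqrt(n) * sigma), so S/N grows as sqrt(n).
    """
    return single_snr * sqrt(n_frames)

print(summed_snr(3.0, 1))   # 3.0
print(summed_snr(3.0, 16))  # 12.0
```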

In what other areas do you think your line of work is being accelerated?

The stability of the CCS values is definitely going to facilitate better analytical approaches moving forward. This is very important for us: we need to be able to rely on these collision cross-section values for the measurements we're making. They're going to be important for extending the idea of accurate mass tagging into what I term accurate 3D tagging. This is crucial, as I believe it will open up the opportunity to develop new tools and software that will allow us not only to dig deep into proteomes but also to accurately measure the features and peptides that we need to measure across very large sample cohorts. We're really looking forward to seeing what we can generate in the future.

Will accurate CCS values also help in realizing clinical applications?

Having an accurate CCS measurement is going to be enormously helpful when you have very complex samples. Its stability will also allow us to substantially increase the accuracy of run-to-run matching. We think this is going to be incredibly important for our clinical applications.

These approaches are really just extending the ideas developed at the Pacific Northwest National Laboratory (PNNL) by Richard Smith and his group over the years. They led the development of accurate mass tagging, using the precursor itself as the matching feature and identifying it across runs. Now that we have a new platform that can enable true 3D matching, it’s exciting to be developing the ideas, algorithms and software in our own laboratory.
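Accurate mass-and-time tagging matches a precursor across runs by its m/z and retention time; a stable CCS value adds a third coordinate. The minimal sketch below expresses that idea as a simple tolerance check in all three dimensions. The tolerances, peptide names and coordinates are hypothetical placeholders, not values from WEHI's or PNNL's software, and a real pipeline would also need retention time alignment, charge-state handling and false discovery rate control.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Feature:
    mz: float   # precursor m/z
    rt: float   # retention time, minutes
    ccs: float  # collision cross section, square angstroms

def matches(a, b, ppm_tol=10.0, rt_tol=0.5, ccs_tol_pct=1.0):
    """True if two features agree in m/z, RT and CCS (tolerances are hypothetical)."""
    ppm_ok = abs(a.mz - b.mz) / b.mz * 1e6 <= ppm_tol
    rt_ok = abs(a.rt - b.rt) <= rt_tol
    ccs_ok = abs(a.ccs - b.ccs) / b.ccs * 100 <= ccs_tol_pct
    return ppm_ok and rt_ok and ccs_ok

def match_to_library(feature, library, **tols):
    """Return names of library entries whose (m/z, RT, CCS) coordinates match."""
    return [name for name, ref in library.items() if matches(feature, ref, **tols)]

# Hypothetical library of previously identified peptides (placeholder values).
library = {
    "peptide_A": Feature(mz=652.3412, rt=21.4, ccs=412.0),
    "peptide_B": Feature(mz=652.3440, rt=21.5, ccs=455.0),  # near-isobaric, co-eluting
}

observed = Feature(mz=652.3415, rt=21.45, ccs=413.5)
print(match_to_library(observed, library))                     # ['peptide_A']
print(match_to_library(observed, library, ccs_tol_pct=100.0))  # ['peptide_A', 'peptide_B']
```

With the CCS check effectively disabled, m/z and retention time alone cannot tell the two near-isobaric, co-eluting entries apart; the third dimension resolves the ambiguity.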

Where can readers find more information?

About Dr. Andrew Webb

Andrew Webb has over 15 years of experience in the life sciences and proteomics fields. Andrew joined the Walter and Eliza Hall Institute (WEHI) in 2011 to develop the institute's mass spectrometry and proteomics capacity.

Andrew Webb has over 15 years of experience in the life sciences and proteomics fields. Andrew joined the Walter and Eliza Hall Institute (WEHI) in 2011 to develop the institute's mass spectrometry and proteomics capacity.

Prior to joining WEHI, Andrew was trained in a variety of areas including Biochemistry, Immunology and Virology with a focus on interfacing high end technology with medical research.

Please note: The products discussed here are for research use only. They are not approved for use in clinical diagnostic procedures.

About Bruker Daltonics

Discover new ways to apply mass spectrometry to today’s most pressing analytical challenges. Innovations such as Trapped Ion Mobility (TIMS), smartbeam and scanning lasers for MALDI-MS Imaging that deliver true pixel fidelity, and eXtreme Resolution FTMS (XR) technology capable of revealing Isotopic Fine Structure (IFS) signatures are pushing scientific exploration to new heights. Bruker's mass spectrometry solutions enable scientists to make breakthrough discoveries and gain deeper insights.

Discover new ways to apply mass spectrometry to today’s most pressing analytical challenges. Innovations such as Trapped Ion Mobility (TIMS), smartbeam and scanning lasers for MALDI-MS Imaging that deliver true pixel fidelity, and eXtreme Resolution FTMS (XR) technology capable to reveal Isotopic Fine Structure (IFS) signatures are pushing scientific exploration to new heights. Bruker's mass spectrometry solutions enable scientists to make breakthrough discoveries and gain deeper insights.