Artificial Intelligence (AI) is the simulation of human intelligence processes by machines, especially computer systems. With the increasing computational power of modern hardware and the widespread adoption of smart devices, AI is more accessible and more widely adopted than ever. AI has permeated and transformed almost every aspect of modern society, with machine learning tools widely used in online advertising, scientific research, and military simulations.

The military sector benefits particularly from AI tools, and the ongoing conflict between Russia and Ukraine is expected to pave the way for autonomous AI-guided weapon systems. Unfortunately, the development of AI tools is rarely accompanied by an ethical evaluation of the system, leaving collateral damage to non-combatants or friendly forces unconsidered.

“Seeing the rapid emergence of AI and its applications in the military, the United States Department of Defense (DOD) disclosed ethical principles for AI in 2020.”

These principles comprise five critical aspects of AI-centric ethics: responsibility, equitable treatment, traceability, reliability, and governability. The North Atlantic Treaty Organization (NATO) adopted these principles and expanded them to include lawfulness, explainability, and bias mitigation. These principles highlight the caution with which prominent military bodies approach AI tools and the steps they are taking to mitigate unwanted AI outcomes.

AI technologies carry similar merits and risks in the medical sector. Medical applications of AI usually involve helping clinicians diagnose diseases and suggest treatments. However, in some cases, AI has begun replacing entire departments formerly staffed by humans.

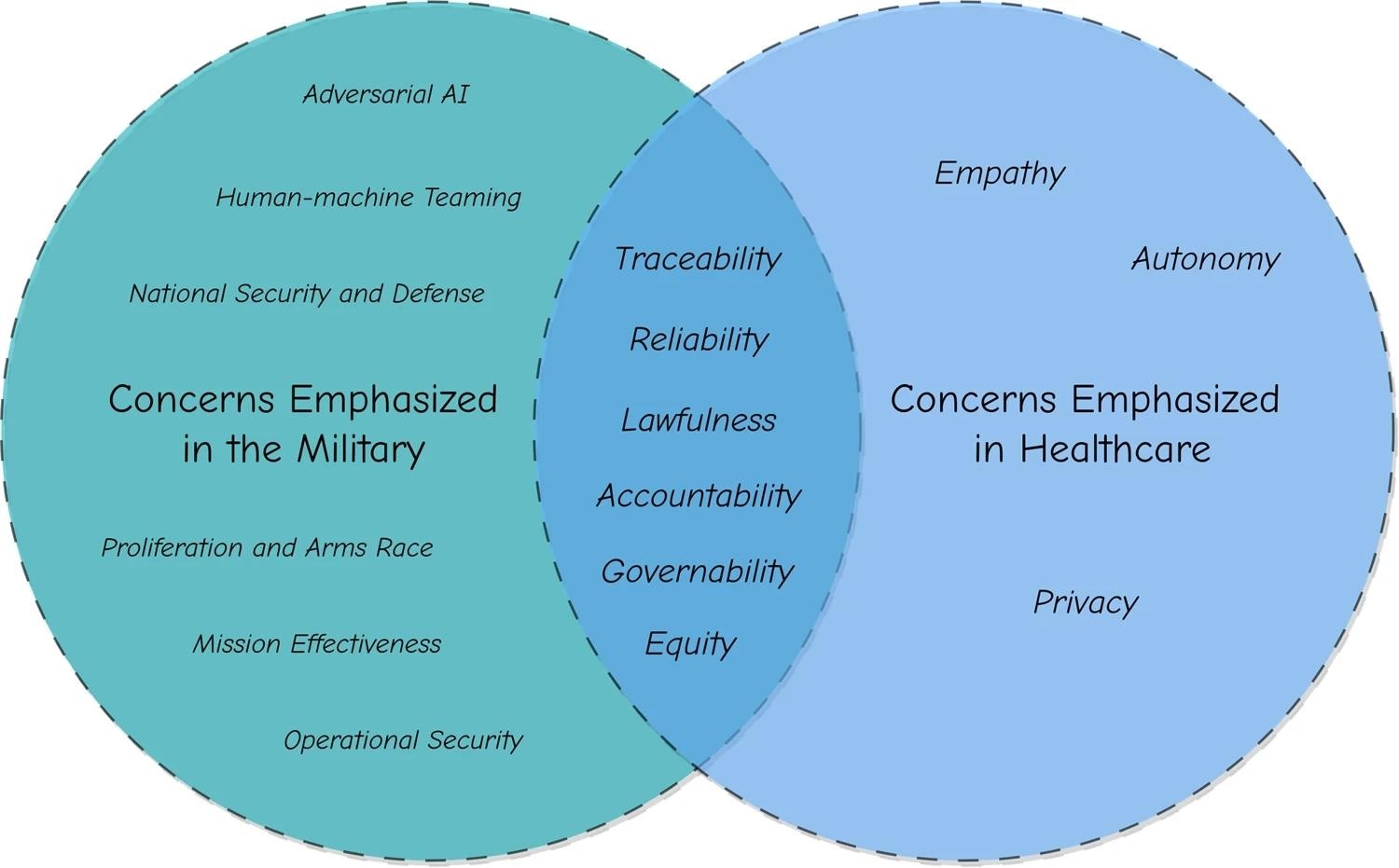

The figure illustrates the commonalities and differences in ethical principles between the military and healthcare. In our assessment, traceability, reliability, lawfulness, accountability, governability, and equity are the ethical principles that both fields have in common. At the same time, ethical principles such as empathy and privacy are emphasized in healthcare, whereas ethical principles such as national security and defense are emphasized in the military.

Generative AI (GenAI) is a novel type of AI designed to produce new content. It learns patterns from existing data and uses this knowledge to generate new outputs. Although these models have proven extremely useful in drug discovery, summarizing evidence for evidence-based medicine, and equipment design, they are designed to optimize desired ‘black and white’ outcomes without regard for any potential (‘grey’) collateral damage. This necessitates ethically driven constraints on AI outputs, a topic that unfortunately remains under-discussed. Furthermore, the malicious use of AI-developed technologies is rarely considered, much less discussed.

Recently, the World Health Organization (WHO) released a document on ethical considerations in the medical adoption of AI. However, this guidance is still in its early stages, and much research and discussion remain before AI can be trusted enough to take over roles formerly filled by humans.

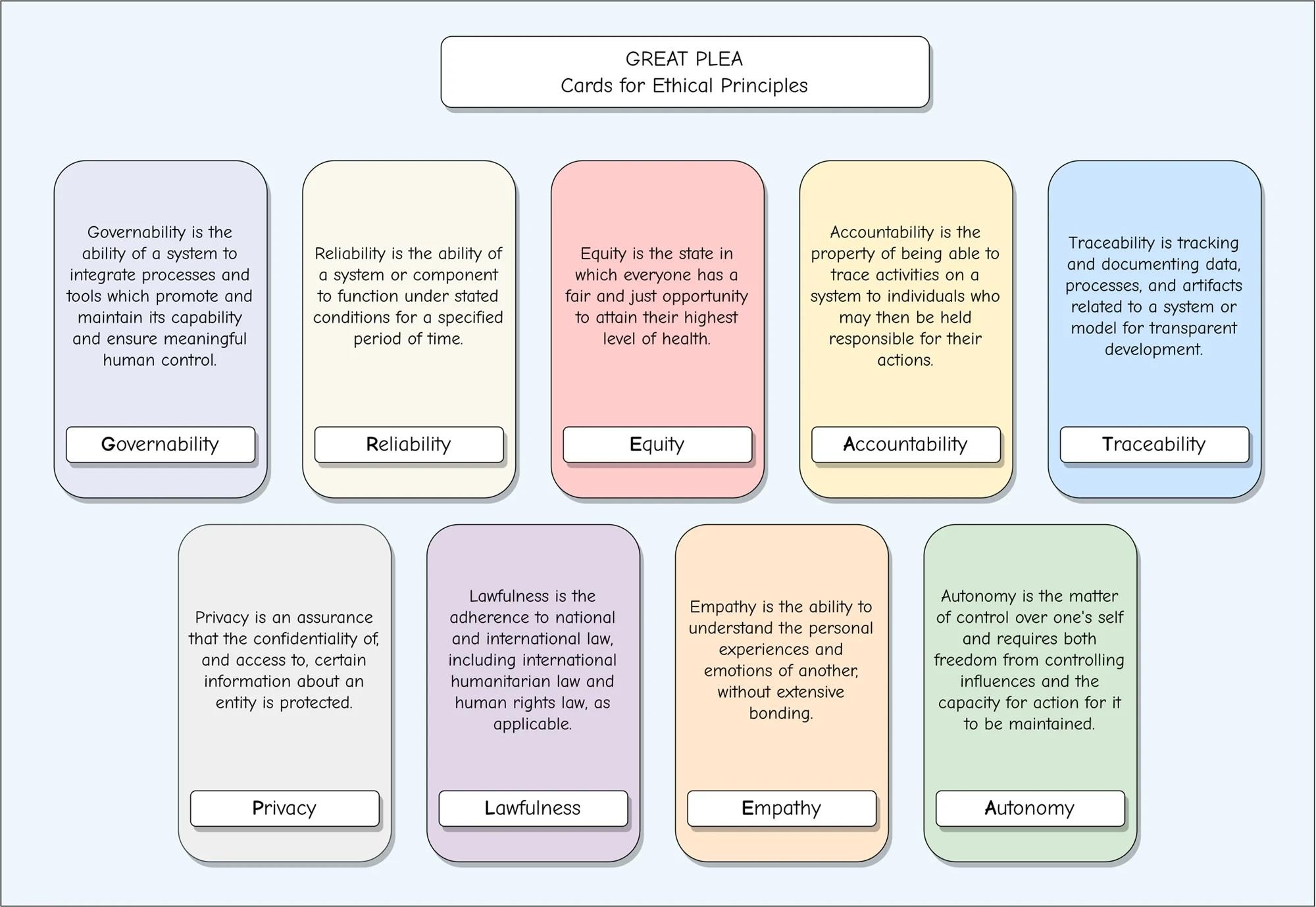

We propose the “GREAT PLEA” ethical principles for generative AI in healthcare, namely Governability, Reliability, Equity, Accountability, Traceability, Privacy, Lawfulness, Empathy, and Autonomy. The GREAT PLEA ethical principles demonstrate our great plea for the community to prioritize these ethical principles when implementing and utilizing generative AI in practical healthcare settings.

The current scenario

Because the ethics of AI in medicine remains controversial and rarely discussed, most research into bias reduction and algorithm optimization has focused on the military sphere. Studies and reports, most notably by the RAND Corporation, have aimed to reconcile legal obligations (such as the Geneva Conventions and the Law of Armed Conflict [LOAC]) with public opinion in developing critical guidelines for the ethical development and use of AI algorithms, especially generative AI.

Ongoing military research suggests that, although AI can be used for most military applications, it is not yet advanced enough to accurately distinguish combatants from civilians, which necessitates a Human-In-The-Loop (HITL) before lethal weapon systems are activated. At the same time, the aforementioned principles laid out by NATO and the US DOD have enabled the development of cutting-edge AI algorithms with ethical considerations built into their code (for example, erring on the side of caution when collateral damage is a potential outcome of a proposed action).

“The success of these ethical principles has also been demonstrated through their ability to adopt and embed AI mindfully, taking into account AI’s potential dangers, which the Pentagon is determined to avoid.”

Public opinion in the US appears divided: some people view autonomous military decision-making by AI systems favorably, while others are uncomfortable with the idea of AI initiating combat action without human authorization.

“These results could be due to the perceived lack of accountability, which is considered something that could entirely negate the value of AI, as a fully autonomous system that makes its own decisions distances military operators or clinicians from the responsibility of the system’s actions.”

The GREAT PLEA for AI in clinical settings

In the present work, the authors propose a novel framework for the ethical use of generative AI in medical environments. Named the “GREAT PLEA,” the framework consolidates and builds upon ethical documents published by the US DOD, the American Medical Association (AMA), the WHO, and the Coalition for Health AI (CHAI). NATO’s principles were deliberately excluded because they depend heavily on AI’s adherence to international military law, which does not apply in clinical settings. Otherwise, NATO’s recommendations are nearly identical to the US DOD principles.

GREAT PLEA is an acronym. GREAT stands for Governability, Reliability, Equity, Accountability, and Traceability, all of which are common to the military and medical domains. PLEA stands for Privacy, Lawfulness, Empathy, and Autonomy; lawfulness is likewise shared with the military, while privacy, empathy, and autonomy are concerns emphasized in healthcare and form a novel set of considerations hitherto ignored in the literature on medical AI ethics.

Because generative AI requires guidelines that address misinformation, potential data bias, and generalized evaluation metrics, these nine principles are intended to form the foundation on which future AI systems can operate with minimal human intervention.

These principles can be enforced through cooperation with lawmakers, the establishment of standards for developers and users, and partnerships with recognized governing bodies in the healthcare sector, such as the WHO or AMA.

Journal reference:

- Oniani, D., Hilsman, J., Peng, Y., Poropatich, R. K., Pamplin, J. C., Legault, G. L., & Wang, Y. (2023). Adopting and expanding ethical principles for generative artificial intelligence from military to healthcare. npj Digital Medicine, 6(1), 1-10. DOI: https://doi.org/10.1038/s41746-023-00965-x, https://www.nature.com/articles/s41746-023-00965-x