I am a Chief Scientific Advisor, Professor in Rehabilitation Sciences, and Director of the Speech and Feeding Disorders Lab at the MGH Institute of Health Professions in Boston, Massachusetts. I am a certified speech-language pathologist and avid researcher, studying speech and swallowing disorders throughout the lifespan.

As I was studying motor control development for speech in children and developing computer-based technologies to quantify that speech, I began interacting with physicians who run ALS clinics. They expressed a need for a technology similar to that which I was using to better measure speech and swallowing in adults with ALS. They had the right technologies and techniques to measure limb movements and walking but struggled to measure and assess the speech system because the muscles are so small and relatively inaccessible, and speech movements are so fast and minute. This kind of measurement traditionally required significant expertise, and they needed more objective measures. From there, I began working on developing computer-based assessment tools for ALS specifically.

Currently, it can take up to 18 months to be diagnosed with ALS, and by the time the diagnosis arrives, drug therapies are no longer as effective due to the loss of motor neurons. Why is it, therefore, vital to be able to identify ALS earlier in patients?

Early diagnosis is essential for a disease like ALS. Only 15 percent of people who get ALS have a genetic marker that we can identify, so it's crucial to have objective ways for clinicians to assess the condition as early and accurately as possible. Since one-quarter of ALS patients have speech impairment as the first symptom, monitoring for subtle changes could serve as an early warning system.

As ALS progresses, motor neurons responsible for speech, swallowing, breathing, and walking can rapidly deteriorate, but if the disease can be spotted in its early stages, while the motor neurons are still intact, the benefits of interventions are likely to be maximized. The right technologies, such as this one, can also detect changes in patients with greater precision, ultimately facilitating better monitoring of the disease's progression.

You are currently involved in a study to test the effectiveness of a digital health app for ALS. Can you tell us more about this study and what its aims are?

The National Institutes of Health (NIH) awarded my team, in conjunction with the app developer Modality.AI, a grant to determine whether speech data collected by an app is as effective as, or more effective than, the observations of clinical experts who assess and treat speech and swallowing problems due to ALS.

The data collected from the app will be compared to results obtained from state-of-the-art laboratory techniques for measuring speech, which are expensive and complicated to use. If the app's results match those from clinicians and their state-of-the-art equipment, we will know the app offers a valid approach.

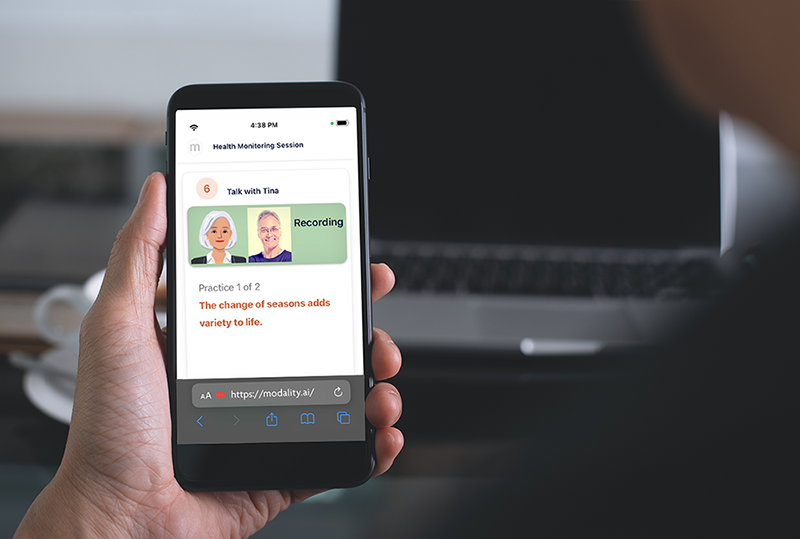

Image Credit: Modality.AI

The app itself features a virtual agent, Tina. How is this virtual agent able to obtain speech data information?

Using the application is as simple as clicking a link. The patient receives an email or text message indicating it is time to create a recording. Clicking the link activates the camera and microphone, and Tina, the AI virtual agent, begins giving instructions. The patient is then asked to count numbers, repeat sentences, and read a paragraph, for example. All the while, the app is collecting data to measure variables from the video and audio signals, such as speed of lip and jaw movements, speaking rate, pitch variation, and pausing patterns.

Tina decodes information from speech acoustics and speech movements, extracted automatically from full-face video recordings obtained during the assessment. Computer vision technologies – such as face tracking – provide a non-invasive way to accurately record and compute features from large amounts of data from facial movements during speech.
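To make timing measures such as speaking rate and pausing patterns concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not Modality.AI's method: it assumes word-level timestamps are already available (for example, from a speech recognizer), and the `Word` type, the `speech_timing_features` function, and the 0.25-second pause threshold are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Word:
    """One spoken word with its start and end time in seconds (hypothetical input format)."""
    text: str
    start: float
    end: float


def speech_timing_features(words, min_pause=0.25):
    """Compute simple timing features from time-ordered word timestamps.

    min_pause is an assumed threshold (seconds): shorter gaps between
    words are treated as normal articulation rather than pauses.
    """
    if not words:
        raise ValueError("need at least one word")
    total = words[-1].end - words[0].start
    if total <= 0:
        raise ValueError("recording must have positive duration")

    # Silent gaps between consecutive words that are long enough to count as pauses.
    gaps = [nxt.start - cur.end for cur, nxt in zip(words, words[1:])]
    pauses = [g for g in gaps if g >= min_pause]
    pause_time = sum(pauses)

    return {
        # Words per minute over the whole recording, pauses included.
        "speaking_rate_wpm": 60.0 * len(words) / total,
        # Words per minute with pause time excluded.
        "articulation_rate_wpm": 60.0 * len(words) / (total - pause_time),
        "pause_count": len(pauses),
        "mean_pause_s": mean(pauses) if pauses else 0.0,
        "percent_pause": 100.0 * pause_time / total,
    }


if __name__ == "__main__":
    sample = [
        Word("one", 0.0, 0.4),
        Word("two", 0.5, 0.9),
        Word("three", 1.5, 2.0),
        Word("four", 2.1, 2.5),
        Word("five", 3.5, 4.0),
    ]
    for name, value in speech_timing_features(sample).items():
        print(f"{name}: {value:.2f}")
```

Tracking values like these across weekly recordings is one way that a slowing speaking rate or lengthening pauses could be quantified over time.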

What information will this health app be able to provide patients? What are the advantages for patients of having all of this information available to them?

Changes in speech are common with ALS, but the rate of progression of ALS varies from person to person. Patients report declining ability to speak to be among the worst effects of the disease. The app will allow patients to document their speech progression remotely. Service providers will use this information to help patients and their families make informed decisions throughout the course of the disease.

As speech-language pathologists, we want to optimize communication for as long as possible. Teaching patients to use alternative modes of communication early is more effective than waiting until they have lost the ability to speak. In addition, confirming a diagnosis early will give patients ample time to begin message and voice banking so that their own voice can be used in a text-to-speech (TTS) or speech-generating device (SGD). There are additional advantages for patients, including lower costs and no need to travel to clinics for a speech assessment.

Lastly, the app generally requires patient engagement for only a few minutes per week. That saves time and expense, requires less energy than a clinical exam, and avoids the delays involved in coordinating an appointment and traveling to a healthcare facility. Lack of early diagnosis and lack of objective measures are two issues that have hindered treatment progress. Early diagnosis is critical in a rapidly progressing disease.

As well as the advantages it offers to patients, what advantages could it offer for healthcare providers?

The app will allow clinicians to access their patients' data remotely and will track the progression of speech automatically, allowing the provider to manage and monitor speech without requiring frequent in-person visits. This level of accessibility will allow clinicians to monitor patients more regularly, draw more accurate conclusions about treatment, and determine the best possible care plan. This makes the entire process simpler and eases the burden on both patient and provider while reducing resource use for clinical services. The app's increased precision and efficiency will also be particularly appealing to clinical scientists and companies using speech patterns as outcome measures in ALS drug trials.

In this study, you have joined up with technology firm Modality.AI. How important are these types of collaborations in bringing new scientific ideas and technologies into the world?

I jumped at the opportunity to work with Modality.AI. The team members have unique and extensive histories of developing AI speech applications and commercial interest in implementing this technology into mainstream health care and clinical trials. New technologies are particularly at risk of failing when a commercial entity does not support them, so this relationship was critical to our overall goals for the study.

I predict these types of collaborations will grow in popularity in the health tech space and will have an increasingly significant impact on studies like this one.

Artificial Intelligence (AI) has seen a huge increase in its adoption in recent years. Why is this, and do you believe we will continue to see AI becoming an integral aspect of healthcare?

AI plays a very important role in identifying conditions that are difficult for our human minds to comprehend because most health problems are multi-dimensional and very complicated, often affecting multiple body parts and producing a variety of symptoms that change over time.

Machine learning is well suited to diagnosing and monitoring certain health conditions because there is so much data to take in. These machines can process this data and identify patterns that human eyes and ears cannot detect with the same degree of accuracy.

Utilizing AI and machine learning in this way will also present a challenge. For these models to be accurate and work properly in the way we'd want, they must be trained. Acquiring the training data required to make these models accurate will be a tall task. For example, to train a machine to make assessments accurately, hundreds or thousands of examples of a specific condition may be required for the algorithm to be trained on it and "learn" it. For this purpose, this data needs to be collected and then very carefully selected. This lack of data proves to be a bottleneck.

While AI has proven invaluable in the medical field, it will not replace clinicians. Human practitioners offer unparalleled personalized care, decision-making, and overarching patient support.

What’s next for you and your study?

Currently, a few patient advocacy groups are piloting the app and giving it to patients. Based on the structure of the grant we received from the NIH, we will continue to work on the app to meet set benchmarks over the next three years to continue in the grant cycle. Phase I will take one year and Phase II, two years.

About Dr. Jordan Green

Dr. Green, who has been at the MGH Institute since 2013, is a speech-language pathologist who studies biological aspects of speech production. He teaches graduate courses on speech physiology, and the neural basis of speech, language, and hearing. As Chief Scientific Advisor in the IHP Research Department, he works with the Associate Provost for Research in the areas of recruitment, strategic planning, and a variety of special projects. He also serves as Director of the Speech and Feeding Disorders Lab (SFDL) at the Institute. He has been appointed the inaugural Matina Souretis Horner Professor in Rehabilitation Sciences. His research focuses on disorders of speech production, oromotor skill development for early speech and feeding, and quantification of speech motor performance. His research has been published in national and international journals including Child Development, Journal of Neurophysiology, Journal of Speech and Hearing Research, and the Journal of the Acoustical Society of America. He has served on multiple grant review panels at the National Institutes of Health. In 2012, he was appointed as a Fellow of the American Speech-Language-Hearing Association and in 2015, Dr. Green received the Willard R. Zemlin award in Speech Science.

His work has been funded by the National Institutes of Health (NIH) since 2000. He is a prolific contributor to important journals, with over 100 peer-reviewed publications. He has presented his work internationally and nationally. He is an advisor for several IHP doctoral students, has directed ten Ph.D. dissertations, and has supervised eleven post-doctoral fellows. He is also an editorial consultant for numerous journals and has served on multiple NIH grant review panels.